The content on this page has been converted from PDF to HTML format using an artificial intelligence (AI) tool as part of our ongoing efforts to improve the accessibility and usability of our publications. Please note:

- No human verification has been conducted of the converted content.

- While we strive for accuracy, errors or omissions may exist.

- This content is provided for informational purposes only and should not be relied upon as a definitive or authoritative source.

- For the official and verified version of the publication, refer to the original PDF document.

If you identify any inaccuracies or have concerns about the content, please contact us at [email protected].

Thematic review: Hot Review Processes

The FRC does not accept any liability to any party for any loss, damage or costs howsoever arising, whether directly or indirectly, whether in contract, tort or otherwise from any action or decision taken (or not taken) as a result of any person relying on or otherwise using this document or arising from any omission from it.

© The Financial Reporting Council Limited 2024. The Financial Reporting Council Limited is a company limited by guarantee. Registered in England number 2486368. Registered Office: 8th Floor, 125 London Wall, London EC2Y 5AS.

1. Executive Summary

Hot reviews are a preventative control over audit quality that provide 'real-time' quality support by identifying and remediating key issues before an audit opinion is signed. Hot reviews are not specifically defined or required by auditing standards. In this thematic, we have defined the hot review process to be an internal independent review of in-progress audit work performed by auditors, and specialists or experts, where applicable.

Hot reviews provide independent challenge, intervention, coaching and support to audit engagement teams, focusing on complex and judgemental areas of audits. Proportionate, appropriately scoped and effectively performed hot reviews can support timely identification and sharing of common findings and good practice with the wider audit practice.

The FRC has analysed hot review processes across the seven Tier 1 audit firms¹ as at April 2023 (the "firms"). The findings support the FRC's supervision of the firms' implementation of International Standard on Quality Management (UK) 1² (ISQM (UK) 1 or 'the standard'), specifically the Engagement Performance component of the standard. In our role as an improvement regulator, we also use this thematic to share the characteristics of an effective hot review programme, providing insight for all audit firms.

ISQM (UK) 1 introduced a new quality management approach focused on proactively identifying and responding to risks to quality. Firms must establish quality objectives, identify and assess the risks to these objectives, and design and implement policies or procedures to address those risks.

All firms subject to this review have hot review programmes in place, though at one firm a full risk-based hot review programme was only rolled out in Autumn 2023. Six firms identified hot reviews as an ISQM (UK) 1 response addressing the risks of being unable to deliver quality engagements, or to identify, monitor and remediate deficiencies in their systems of quality management on a timely basis.

The key findings in this thematic that firms should be aware of and take steps to address are:

- Not all firms set out sufficiently detailed review timeframes to make clear to both reviewers and engagement teams when key milestones, e.g., issuing and responding to review comments, are expected to be completed.

- Only one firm has clear operational metrics to monitor the status of ongoing hot reviews. Relevant, timely and comparable management information allows firms to quickly identify audits that require more support or escalation, as well as trends, themes and emerging risks that may impact audit quality.

- Not all firms require the reviewer to check whether remediating actions have been appropriately taken. Firms should consider clearly outlining which review comments require clearance by the hot reviewer before an audit opinion is signed.

- Not all firms provide reviewers with guidance, aide memoires or work programmes. Such documents support reviewers in exercising appropriate judgement and promote consistency in how hot reviews are undertaken.

Examples of good practice observed in this thematic include:

- Providing broader training for hot reviewers, for instance, soft skills training, a buddy system and assigning subject matter expert roles amongst reviewers.

- Reviewers undertaking a learning and de-brief process to compare inspection findings across hot and cold reviews, where an audit was subject to both.

- Using a single digital database to record hot review comments centrally and hold the data collated from hot review findings. This facilitates real-time interaction between reviewers and engagement teams, monitoring of review comments and identification of themes.

2. Scope

This thematic covers the hot review processes that have been operational for audits with 2022 year-ends onwards and includes:

- Planning of firms' hot review programmes, including how hot review selections are made;

- The process of performing a hot review, including scoping and review timeframes;

- Identification, classification and evaluation of findings identified in hot reviews;

- Selection, training and allocation of hot reviewers; and

- Measuring effectiveness of hot reviews.

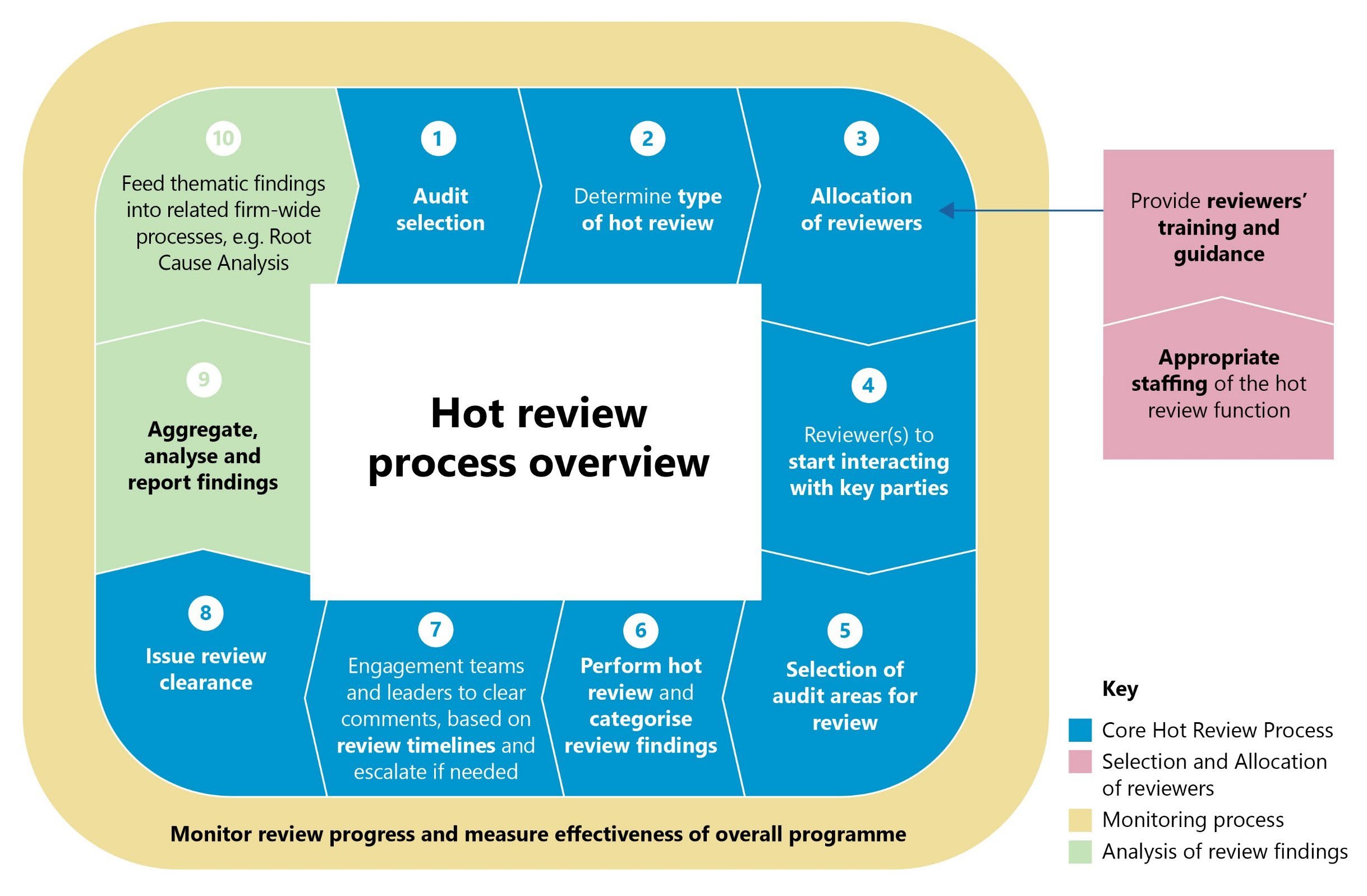

Illustration of a typical hot review process.

Key

- Core Hot Review Process

- Selection and Allocation of reviewers

- Monitoring process

- Analysis of review findings

3. Audit selection and types of hot reviews

Why is audit selection for the hot review process important?

Appropriate selection of audits for hot review ensures resources and support are focused on the audits which need them most to effectively mitigate the risks of poor audit quality.

Observations: The scope of audits subject to hot review varies across firms. At all firms, audits within the scope of the FRC's AQR inspections³ are subject to selection. Audits outside AQR scope are also subject to hot review selection at three firms, which allows coverage of a wider range of risks and a broader population of audits and engagement leaders, to improve consistency in audit quality across the portfolio of audited entities.

At two firms, all audits in AQR scope are selected for hot review annually unless there are exceptional circumstances.

In the 2022/23 inspection cycle, the number of hot reviews performed and the coverage achieved over audit portfolios varied significantly across firms. This was driven by reviewer resourcing capacity and the depth of reviews undertaken. The number of hot reviews of individual audits undertaken at firms ranged from three to 210, with a median of 80.

Cyclical approach

A cyclical approach to selecting audits, alongside consideration of risk factors, can support good coverage of audits and engagement leaders and maximise use of reviewer resources.

Observations: Five firms use a cyclical approach to audit selection, with three firms adopting one specific to FTSE 350 audits. At one firm, FTSE 350 audits are selected for hot review at least once every two years, whilst at another firm this is at least once every three years. At the remaining firm, FTSE 350 audits undergo a hot review if there has not been an AQR review or hot review in the past three years.
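The three-year rule described for the remaining firm can be expressed as a simple eligibility check. The Python sketch below is a hypothetical illustration, not any firm's actual system. It assumes that review history is held as the calendar year of each audit's most recent AQR review and most recent hot review. The `Audit` record, its field names and the year-counting convention (a review three or more cycle years ago falls outside the window) are our assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Audit:
    """Hypothetical record of an audit's review history (field names are illustrative)."""
    name: str
    ftse_350: bool
    last_aqr_review_year: Optional[int] = None
    last_hot_review_year: Optional[int] = None


def due_for_cyclical_hot_review(audit: Audit, cycle_year: int, window_years: int = 3) -> bool:
    """Return True if a FTSE 350 audit has had neither an AQR review nor a hot
    review within the last `window_years` cycle years (assumed convention)."""
    if not audit.ftse_350:
        return False  # this cyclical rule only applies to FTSE 350 audits
    past_reviews = [
        year
        for year in (audit.last_aqr_review_year, audit.last_hot_review_year)
        if year is not None
    ]
    if not past_reviews:
        return True  # never reviewed, so select it
    # Select if the most recent review of either kind is at least window_years old
    return cycle_year - max(past_reviews) >= window_years


portfolio = [
    Audit("Audit 1", ftse_350=True, last_aqr_review_year=2020, last_hot_review_year=2021),
    Audit("Audit 2", ftse_350=True, last_hot_review_year=2023),
    Audit("Audit 3", ftse_350=False),
]
selected = [a.name for a in portfolio if due_for_cyclical_hot_review(a, cycle_year=2024)]
print(selected)  # ['Audit 1']
```

The window is a parameter, so a firm could test other cycle lengths against its portfolio. In practice, a cyclical check like this would sit alongside the risk-factor selection described below rather than replace it.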

At another firm, high-risk non-AQR audits are selected for hot review on a three-year cycle where the audit fee is above a certain level.

At two firms, engagement leaders are selected for hot review on a cyclical basis. At one of these firms, they are selected once every three years.

Selection risk factors

Using a range of risk factors to select audits for hot review helps ensure audits with high risk profiles receive support. Risk factors should be reviewed periodically to ensure they reflect the firm's audit quality risks, recent inspection findings, reputational risk factors, changes to the portfolio of audited entities, and any external emerging risks to audit quality.

Observations: Most firms have a formal framework setting out the list of risk factors to be considered. The table below summarises the risk factors applied across firms when selecting a sample of audits for hot review.

| Risk factor | Firm A | Firm B | Firm C | Firm D | Firm E | Firm F | Firm G |
|---|---|---|---|---|---|---|---|
| First-year audits | √⁴ | √ | √ | √ | √ | √ | √ |
| Coverage (Responsible Individuals / office) | √ | √ | √ | √ | | | |
| Priority sectors⁵ | √ | √ | √ | | | | |
| AQR/internal inspection history | √ | √ | √ | √ | √ | | |
| Retiring engagement leaders | √ | √ | √ | | | | |
| New engagement leaders | √ | √ | √ | √ | √ | | |
| Last-year audits | √ | √ | | | | | |

The most common selection risk factor is first-year audits, as all firms recognise the greater level of risk involved when auditing opening balances.

Another common risk factor is audits with unsatisfactory prior-year inspection results, as a hot review of the areas where findings were identified can help engagement teams and leaders learn from mistakes and ensure that remediating actions have been taken.

Other risk factors considered include economic or industry-specific risks, going concern risks and concerns about specific audited entities.

At three firms, selection risk factors are reviewed on an annual basis.

Adjustments to audit selections

Audit selections should be reviewed during the inspection cycle to consider whether adjustments are needed. Firms should balance making audit selections upfront, which enables proactive planning and resource management, with responsiveness to requests for support from engagement leaders and to changes at audited entities or in the macroeconomic environment, e.g. governance issues at audited entities, or an increase in going concern risks for certain sectors due to macroeconomic factors such as natural disasters or war.

Observations: Most firms make hot review selections annually and do not formally re-review their selections. This increases the risk that they may not review audits where the quality risks have increased during the year.

Good practice: At one firm, audit selections and risk factors are formally reviewed every four months (with effect from November 2022) to consider any changes to engagement leaders, the level of risks assessed for individual audits and any emerging risks.

Making hot review requests

Engagement teams and leaders should be encouraged to proactively assess risk and, where appropriate, request a hot review. This can be achieved through clear tone from the top with messaging around the benefits of hot reviews and by creating a culture of continuous improvement and commitment to quality. Firms should consider such requests and adjust their review selections where needed.

Observations: Almost all firms have processes for engagement leaders to request a hot review or raise an 'emergency' hot review request where a concern is raised late in the audit process. At some firms, audit executives or the central risk function can also request a hot review where issues are identified on specific audits.

Types of hot review

Firms determine the type of hot review to be performed for each audit selected. This is usually performed by the firms' central audit quality team at the time of the audit selections.

Observations: Five firms have at least two different types of hot review for individual audits: (i) full scope and (ii) focused/light scope. At the remaining two firms, the scoping of a review varies by audit and typically includes key areas of planning and completion, as well as significant risk audit areas.

The usual differences between full and focused/light scope reviews are:

| | Full scope | Focused/light scope |
|---|---|---|
| Description | Detailed review of a wide range of audit areas and workpapers. | More selective or reduced review scope. |
| Areas scoped in for review: | | |
| Key audit planning | Yes, e.g., materiality, risk assessment and scoping, independence, fraud considerations and general planning procedures | Yes, but less than a full scope with a focus on materiality, risk assessment and scoping and fraud considerations |
| General IT controls (GITCs) | Yes, where necessary | Usually not scoped in |
| Fraud and significant risk | Yes, though number of areas varies | A limited number of areas |
| Key audit completion | Yes, e.g., audit opinion, audit committee communication, summary of issues/misstatements | Yes, but less than a full scope with a focus on audit opinion and audit committee communication |

Observations: Three firms limit the number of significant risk audit areas covered on full scope reviews to between three and six, depending on the size and complexity of the audit. The other firms have not set limits.

One firm performs light scope reviews on all audits inspected by AQR in the previous cycle to cover the audit areas where AQR findings were identified.

In determining the split between full and focused/light scope hot reviews, firms should balance resourcing capacity with coverage of the audit portfolio and being able to perform sufficiently comprehensive hot reviews on high-risk audits.

Observations: The ratio of full to focused/light scope hot reviews performed varies between firms. At most firms, full scope reviews made up between 50% and 94% of the hot reviews performed.

4. Hot review process

4.1 Selecting audit areas for review

Why is scoping for individual reviews important?

Appropriate selection of audit areas for review ensures focus on the areas of highest risk. Reviewers should have access to guidance on what to consider when scoping their review, including any focus areas mandated or centrally selected for the current inspection cycle. These focus areas should reflect emerging risks to audit quality and may be based on key findings from the prior year's internal and external quality inspections, root cause analysis outcomes and developments in accounting and auditing standards.

Good practice: At one firm, culture of challenge, potential biases, audit documentation and audit language are mandatory review areas for all hot reviews. Reviewers receive guidance on identifying potential biases that may affect engagement teams' judgements, reviewing the appropriateness of the language used in audit documentation and assessing if there is sufficient evidence of challenge of management.

This helps ensure reviewers check that professional scepticism has been exercised by engagement teams, and that engagement teams' challenge of management is specifically and clearly evidenced on audit files.

Observations: Three firms have a set list of audit areas expected to be scoped in on hot reviews of individual audits. One of these firms requires reviewers to document an explanation if any suggested areas are excluded, whilst at another firm, the list is mandatory for the current cycle and is based on key historic internal and external findings, including group audits, impairment of assets, risk assessment, journal entry testing and cash flow statements.

At some firms, certain audit areas are mandatory even if not deemed to be a significant risk, for example, cash at one firm and going concern at another.

The remaining firms do not have a set list, but do have expectations on the nature and number of audit areas to be covered. The most common areas included are:

- Materiality

- Risk assessment

- Fraud risks

- Significant risks (and revenue)

- Group audits, if applicable

- Reporting and communication with Those Charged with Governance (TCWG)

At one firm, reviewers receive guidance on the factors to consider when deciding which significant risks to scope into a hot review. These include the extent and significance of historic review findings, the significant risks covered in the last hot review, and changes to the entity's risk profile.

Example: Consideration of climate-related risks in hot review

A technically challenging and fast-evolving area is the impact of climate-related risks on financial statements, as this can present a risk of material misstatement. Reviewers should receive guidance to assess whether teams have considered the potential materiality of climate-related risks and whether relevant risks have been appropriately addressed. For example, for certain entities, reviewers may need to assess whether valuation risks arising from the exposure of assets to physical threats, such as flooding, have been adequately considered and addressed.

Observations: At four firms, climate-related risk has been scoped in as a focus area in hot reviews on individual audits. At three of these firms, aide memoires or checklists for climate-related risks have been developed to support reviewers.

At one firm, ESG specialists in the central audit quality team will be consulted if reviewers identify an audit area impacted by ESG risks.

At the remaining two firms, climate-related risk is not identified as a focus area for hot reviews.

Scoping of a review of a group audit

Group audits have been an AQR focus area due to recurring inspection findings. Group audits with a large number of geographically dispersed subsidiaries and a complex corporate structure can present auditors with additional challenges. These include assessing compliance with the UK Ethical Standard, assessing the impact of any differences in accounting policies, understanding laws and regulations in different jurisdictions, managing the scope and timing of component auditors' work, and reviewing and challenging this work. Therefore, it is key that hot reviewers scope and conduct their review of group audits appropriately.

Observations: At all firms, when a group audit is reviewed, significant components may be scoped in if the component audit team is UK based. Significance can be determined based on the size, complexity, and location of the components, and if they give rise to any significant risks at the group audit level. If the component audit team is not UK based, the group audit team's oversight of the component auditor's work will be scoped in for review.

At two firms, reviewers receive specific guidance on reviewing group audits. At one firm, reviewers receive guidance on the key factors, e.g., materiality, to consider when scoping in components. At another firm, reviewers receive a checklist of minimum points to consider when reviewing group oversight, e.g., whether the planned level of oversight reflects the size and significance of each component scoped in, and whether the group engagement team has evidenced the challenges made to the component auditors to demonstrate oversight.

4.2 Interaction with key parties

a) Engagement teams, leaders, and Engagement Quality Control Reviewers (EQCRs)

Ongoing interaction between reviewers and engagement teams helps reviewers stay aware of audit progress and any emerging issues. Reviewers' attendance at key meetings, e.g., meetings on audit planning, risk assessment and audit completion, enables more timely challenge and support.

Observations: Reviewers at all firms are expected to engage with engagement teams as soon as the audit selections and reviewer allocations have been approved, and before the planning stage of the audit commences.

Firms' expectations for reviewers' attendance at audit team meetings vary.

At most firms, reviewers hold kick-off or audit planning meetings on risk assessment and audit approach with engagement teams to plan the hot review, including discussing scoping and agreeing a review timeline. At one firm, reviewers' attendance at the audit approach meeting on significant audit risks is one of the requirements of the hot review process. At four firms, reviewers are expected to attend closing meetings with engagement teams prior to issuing review clearance and audit sign-off.

All firms expect reviewers to interact with EQCRs throughout the review process. At four firms, reviewers are specifically encouraged to arrange separate conversations with the EQCRs if they have particular concerns. At one of these firms, the extent of communication between reviewers and EQCRs is set out in the reviewer guidance document.

Good practices:

- At one firm, reviewers share a standard agenda and a briefing pack with engagement teams at their first planning meeting. This drives consistency in engagement teams' expectations of the process. The agenda includes the objectives of the review, discussion of the significant audit risks, initial scoping for the review, and the review timeline.

- At one firm, engagement teams use a presentation template for the first planning meeting with the reviewers to ensure consistent coverage of key matters. This includes a business overview, audit risks and materiality, internal controls, and significant risks.

b) Management of audited entities

Hot reviewers are not expected to have any engagement with the management of audited entities. This allows them to maintain independence and objectivity in their role.

4.3 Review timeframes

Why is a central review timeframe important?

Setting timeframes for when reviewers and engagement teams are expected to raise and address comments for different audit phases drives timely engagement and helps ensure significant issues are identified and addressed before the next audit phase. This is particularly important when identifying and resolving concerns at the audit planning phase. Set timeframes also help reviewers and engagement teams plan and manage their time appropriately to avoid undue pressure.

Firms should monitor whether reviewers and engagement teams are achieving the target timeframes and periodically evaluate if adjustments are needed to ensure they remain achievable and able to motivate the desired behaviours from both reviewers and engagement teams.

Observations: Firms' approaches to setting review timeframes, and the granularity of any such timeframes, vary:

(i) Timeframe for reviewers to complete hot review

| Audit Phase/Firm | Firm A | Firm B | Firm C | Firm D | Firm E | Firm F | Firm G |
|---|---|---|---|---|---|---|---|
| Planning | No central timeframe set for reviewers, but review slots are scheduled with support from the project management team within the hot review function | Within 5 business days of the EQCR review | One month before the fiscal year-end of the audited entity | By the deadline agreed between reviewer and engagement team | No central timeframe set for reviewers | Within 2 months of audit team's completion | No central timeframe set for reviewers |
| Execution | At least one week before sign-off date | No central timeframe set for reviewers | As agreed with the team, after engagement leader and EQCR review | | | | |
| Completion | | | | | | | |

(ii) Timeframe for engagement teams to deliver audit work for hot review/address comments

| Audit Phase/Firm | Firm A | Firm B | Firm C | Firm D | Firm E | Firm F | Firm G |
|---|---|---|---|---|---|---|---|
| Planning | Ready for review 2.5 months pre-year end | Comments to be cleared by the fiscal year-end of the audited entity | Comments to be cleared before the next audit phase begins | Comments to be cleared before the next audit phase begins | No central timeframe set as long as comments are cleared before clearance is issued and audit opinion is signed off | | |
| Execution | Ready for review at least 1-2 weeks before audit opinion signed | Comments must be addressed within 3 business days and prior to the close meeting | No central timeframe set as long as comments categorised as red and amber are cleared before clearance is given to the audit team prior to audit opinion sign-off | No central timeframe set as long as comments are cleared before audit opinion is signed off | Comments to be cleared before the next audit phase begins | Comments categorised as fundamental and significant must be cleared within 30 days of being raised | |
| Completion | Ready for review 4 days before audit opinion is signed off | | | | | | |

Example: At one firm, the timeframe set centrally for engagement teams is derived from three key dates:

1. the year-end of the audit subject to review;
2. the issuance date of audit committee reporting; and
3. the audit opinion sign-off date.

Within audit planning, the firm has set out the following timeframe for engagement teams to share work with the reviewer and address comments raised:

1. Audit plan: provided to the hot reviewer four working days before being issued to the Audit Committee;
2. Audit plan comments: addressed no later than 24 hours before the plan is issued to the Audit Committee;
3. Audit planning: provided to the hot reviewer 2.5 months before year end; and
4. Audit planning comments: addressed no later than two weeks after the comments have been raised.
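Each planning milestone is anchored to a key date, so the target dates can be derived mechanically once those dates are known. The Python sketch below is a hypothetical illustration, not the firm's actual method. It rests on three assumptions of ours: working days are counted as Monday to Friday, bank holidays are ignored, and "2.5 months" is treated as two calendar months plus 15 days. The function names are our own.

```python
from datetime import date, datetime, timedelta


def minus_working_days(day: date, n: int) -> date:
    """Step back n working days (Mon-Fri); bank holidays are ignored (assumption)."""
    while n > 0:
        day -= timedelta(days=1)
        if day.weekday() < 5:  # 0-4 are Monday to Friday
            n -= 1
    return day


def minus_months(day: date, months: int) -> date:
    """Step back whole calendar months, clamping to the last day of the target month."""
    year, month_index = divmod(day.year * 12 + (day.month - 1) - months, 12)
    month = month_index + 1
    first_of_next = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
    last_day = (first_of_next - timedelta(days=1)).day
    return date(year, month, min(day.day, last_day))


def planning_milestones(year_end: date, ac_issue: datetime) -> dict:
    """Derive audit-planning milestones from the year-end and the date and time
    the audit plan is issued to the Audit Committee."""
    return {
        "audit plan to hot reviewer": minus_working_days(ac_issue.date(), 4),
        "audit plan comments addressed": ac_issue - timedelta(hours=24),
        # "2.5 months" approximated as two calendar months plus 15 days (assumption)
        "audit planning to hot reviewer": minus_months(year_end, 2) - timedelta(days=15),
    }


def planning_comments_due(raised: date) -> date:
    """Planning comments are to be addressed within two weeks of being raised."""
    return raised + timedelta(weeks=2)


milestones = planning_milestones(date(2024, 12, 31), datetime(2024, 11, 15, 10, 0))
for name, due in milestones.items():
    print(f"{name}: {due}")
```

With a 31 December 2024 year-end and the plan issued to the Audit Committee at 10:00 on Friday 15 November 2024, this gives the following milestones:

- The audit plan goes to the hot reviewer on Monday 11 November.
- Comments on the plan are addressed by 10:00 on 14 November.
- Planning work goes to the hot reviewer on 16 October.

A firm's own calendar of bank holidays would need to be added to the working-day count in practice.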

4.4 Monitoring progress of hot reviews

a) Red, Amber, Green (RAG) risk rating

Assigning risk ratings to ongoing reviews gives firms an indication of whether a review is on track or at risk, helping them prioritise attention and resources.

Observations: Five firms assign RAG ratings. At two firms, reviewers regularly assign RAG ratings to their ongoing reviews using factors such as review status against the planned timetable, audit status against audit quality milestone targets, any concerns about quality of work and involvement of engagement leaders, and the extent of the team's constructive engagement with the hot review.

At another firm, each milestone for when audit work should be ready for hot review is tracked and rated as either green (delivered according to timeline), or red if otherwise.

At another firm, ratings are assigned based on the review status of different audit phases, and the days until the deadlines agreed between the engagement teams and the reviewers. For example, a review would be rated red or amber if the review of audit planning was not cleared and there was less than 14 or 30 days until the audit planning deadline, respectively.
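The date-based rule described for this firm translates directly into a small function. The minimal Python sketch below is illustrative only. The source states only the red and amber thresholds, so two behaviours are our assumptions: a cleared planning review is rated green, and an uncleared review with 30 or more days remaining is also rated green.

```python
from datetime import date
from enum import Enum


class Rag(Enum):
    RED = "red"
    AMBER = "amber"
    GREEN = "green"


def rate_planning_review(cleared: bool, today: date, deadline: date) -> Rag:
    """RAG-rate the audit planning review by days remaining to the agreed deadline."""
    if cleared:
        return Rag.GREEN  # assumption: a cleared review is on track
    days_left = (deadline - today).days  # negative once the deadline has passed
    if days_left < 14:
        return Rag.RED
    if days_left < 30:
        return Rag.AMBER
    return Rag.GREEN  # assumption: 30 or more days left is still on track


deadline = date(2024, 9, 30)
print(rate_planning_review(False, date(2024, 9, 20), deadline).value)  # red
print(rate_planning_review(False, date(2024, 9, 10), deadline).value)  # amber
print(rate_planning_review(False, date(2024, 8, 1), deadline).value)   # green
```

Recomputing the rating daily for every ongoing review would produce the kind of status list that can feed the management information and reporting discussed below.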

b) Regular meetings

Regular hot review team meetings allow reviewers to discuss ongoing review progress, seek advice and input from others, and discuss forward planning.

Observations: All firms have hot review team meetings on a regular basis. At some firms, these are held more frequently during the busy periods to enable closer monitoring.

c) Timesheets

Accurately recording time spent on reviews provides firms with management information to identify reviews which are not progressing as planned and inform forward planning.

Observations: All firms record and monitor time spent on hot reviews using their timesheet systems. Data from the prior year is typically used to inform high-level planning for the next cycle of reviews. Firms tend not to set budgets for individual hot reviews. Time spent on a review varies across firms depending on the size and complexity of the audit, the scope of the review and reviewer resourcing capacity.

d) Management Information

Good quality management information using relevant, timely and comparable data allows firms to identify reviews that are not progressing as planned, or reviews that may require more support, scrutiny or escalation, as well as trends, themes and emerging risks that may contribute to poor audit quality.

Observations: Five firms produce management information for monitoring the progress of hot reviews, varying in format and level of detail.

Of these five firms, one uses a number of operational metrics to monitor hot review activities. These include the number of comments not addressed by engagement teams within 30 days, and the percentage of high-priority and significant comments raised during the period. Three firms produce relatively limited management information, e.g., a split of reviews by status and a dashboard of specific reviews of concern.
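The sketch below shows how the two operational metrics mentioned above could be computed from a log of review comments. It is a minimal Python illustration, not any firm's tool. The `ReviewComment` record and the priority labels are hypothetical. We also assume that a comment closed more than 30 days after it was raised counts as "not addressed within 30 days", in the same way as one still open past that point.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, Optional


@dataclass
class ReviewComment:
    """Hypothetical hot review comment record."""
    raised: date
    priority: str                     # illustrative labels: "high", "significant", "moderate"
    addressed: Optional[date] = None  # None while the comment is still open


def not_addressed_within(comments: Iterable[ReviewComment], as_of: date, days: int = 30) -> int:
    """Count comments still open more than `days` after being raised, or closed late."""
    limit = timedelta(days=days)
    count = 0
    for c in comments:
        # Measure to the closing date, or to the reporting date if still open
        end = c.addressed if c.addressed is not None else as_of
        if end - c.raised > limit:
            count += 1
    return count


def share_of_priority(comments: Iterable[ReviewComment], start: date, end: date,
                      priorities=("high", "significant")) -> float:
    """Percentage of comments raised in [start, end] that carry one of `priorities`."""
    in_period = [c for c in comments if start <= c.raised <= end]
    if not in_period:
        return 0.0
    flagged = sum(1 for c in in_period if c.priority in priorities)
    return 100.0 * flagged / len(in_period)


log = [
    ReviewComment(date(2024, 1, 1), "high"),
    ReviewComment(date(2024, 1, 1), "moderate", addressed=date(2024, 1, 10)),
    ReviewComment(date(2024, 1, 1), "significant", addressed=date(2024, 3, 1)),
    ReviewComment(date(2024, 2, 20), "moderate"),
]
print(not_addressed_within(log, as_of=date(2024, 3, 5)))         # 2
print(share_of_priority(log, date(2024, 1, 1), date(2024, 3, 31)))  # 50.0
```

Because both metrics are computed from the same comment log, they stay comparable across reviews and periods. This is the property the management information described above relies on.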

e) Reporting to key executive and oversight bodies

Reporting management information to key decision-making bodies periodically allows accountable individuals and oversight bodies to be well-informed of the hot review progress, to challenge constructively and provide effective oversight, and assess for any emerging risks and impacts on audit quality at the firm.

Observations: Six firms have regular reporting in place summarising the progress of individual hot reviews to key executive and oversight bodies, although the level of detail and formality varies by firm.

Good practice: At one firm, the RAG ratings for ongoing reviews are shared with audit business leaders and the senior audit leadership on a regular basis, so they can consider if appropriate mitigating actions are being taken. This ensures that senior leadership maintain oversight and accountability.

5. Hot review findings

5.1 Classification of review comments

Classifying review comments by severity and urgency allows engagement teams to prioritise their work and effort. Reviewers should receive guidance on how to categorise findings.

Observations: At six firms, review comments or observations raised are categorised. At three of these firms, two categories are used, whilst at the other three firms there are three categories.

Good practice: At one firm, comments are assigned a priority (fundamental, significant or moderate) and a type (audit approach, documentation, efficiency). Reviewers receive guidance on determining this classification. This dual classification helps audit teams plan appropriate remediating actions and determine how urgently they are needed.

5.2 Use of templates

Using a template to record hot review comments promotes consistency in documentation and allows reviewers to organise information in a structured way for ease of understanding by the engagement teams. This also increases efficiency in how hot reviews are undertaken and facilitates the comparison and analysis of findings raised across multiple hot reviews.

Observations: Six firms have templates for reviewers to use outside the audit file. At the remaining firm, a new template has been designed for the revamped hot review programme for 2023/24. Most firms use a spreadsheet format.

Good practice: At one firm, comments or observations raised are recorded centrally in a digital tool. Engagement teams have access to the review comments recorded on their engagement. This allows hot reviews to be undertaken from end-to-end on a single platform and supports real-time interactions between reviewers and engagement teams. This also enables real-time monitoring of review comments centrally, comparison between reviews and identification of themes.

5.3 Review resolution and escalation process

A formal resolution and escalation process provides an avenue to discuss and resolve disagreements with senior individuals independent of the audits being reviewed. Reviewers should be well informed of this process to enable it to be utilised appropriately.

Observations: All firms have resolution processes in place if disagreements cannot be resolved directly between an engagement leader and the hot reviewer.

At four firms, a technical/risk panel can be convened to resolve disagreements. At two firms, a multi-stage escalation process is in place to escalate up to audit business leaders if the issues are not resolved at the earlier stage. At the remaining firm, disagreements are escalated to the partner heading up the hot review function who can then consult with the head of quality & risk and other senior partners.

5.4 Review clearance

It is crucial to ensure hot review comments have been adequately addressed before the audit opinion is signed. Firms should clearly outline when, based on the severity of the review comments, reviewers are expected to re-review the audit files to ensure remediating actions have been taken.

Observations: At most firms, formal clearance from the hot reviewer is required before the audit is signed off. The process of issuing reviewer clearance varies between firms:

| | Firm A | Firm B | Firm C | Firm D | Firm E | Firm F | Firm G |
|---|---|---|---|---|---|---|---|
| Reviewers must check the remediating actions on the audit file prior to giving clearance? | √ | √⁷ | √⁷ | √ | | | |
| Is formal clearance provided by reviewers before audit sign off? | √ | √ | √⁸ | √ | √ | | |
| Is clearance retained on audit file? | √ | | | | | | |

At two firms, reviewers are not required to check whether the written responses to review comments have been adequately reflected on the audit files before providing clearance, irrespective of the severity of the comments.

At one of these two firms, a pilot is being run where any hot review comments raised in the area of journals are subject to re-review of the audit working papers by the hot reviewer before hot review clearance is given. The objective of the pilot is to assess the practicalities, benefits and impacts associated with reviewers re-reviewing the audit work for all comments raised.

Good practice: At one firm, email clearance issued by reviewers to the engagement teams and leaders is required to be retained on audit files. Retaining the hot review clearance on audit files ensures review procedures are complete before audits are signed off.

5.5 Review outcomes

Hot reviews can be used to hold engagement leaders accountable for audit quality by grading the audit after the review is concluded. Care is needed to ensure this does not undermine the coaching and support aspects of the hot review process, or discourage individuals from requesting a hot review. This may include careful consideration of whether the review outcomes should impact how engagement leaders are appraised and rewarded.

Observations: Two firms assign an overall rating to completed hot reviews.

At one firm, the objective is to assess the overall impact of the hot review on the audit and determine whether the engagement leader should be reviewed again. After an audit is signed off, the hot review comments raised are assessed by a moderation panel, chaired by the Director heading up the review team. The assessment is based on the nature of the findings, the engagement team's responses, preliminary causal factors identified, and the remediation plan and its status. Reviews are classified into three groups (minimal, moderate, extensive) based on the level of intervention needed. This classification then drives the follow-up: for instance, if the audit was rated as requiring extensive intervention during the hot review, the engagement leader will be subject to a cold review on a different audit in the same review cycle.

At another firm, ratings are assigned based on the overall timeliness of the review, the quality of the audit work performed and the engagement between reviewers and the engagement team. The overall ratings are then used to support periodic monitoring and provide updates to the audit leadership executives.

5.6 Aggregation, analysis and reporting of hot review findings

Findings, individually or in aggregate, may indicate the need for further quality monitoring for certain audits or individuals, or indicate weaknesses in a firm's system of quality management. Findings should be centrally aggregated, analysed and reported on a regular basis to identify common and emerging issues and allow remediating actions to be taken. This may include improvement or updates to the hot review process, or a review of a firm's culture in the audit practice. This contributes to the effective functioning of the system of quality management at firms.

Aggregation and analysis of findings

Observations: Hot review findings are centrally aggregated and analysed at all firms, at varying frequencies (quarterly, monthly, weekly or on an ongoing basis). The hot review function is typically responsible for ensuring these are tracked and summarised. At one firm, aggregation of findings is performed quarterly, while at another firm, it is undertaken monthly.

Firms feed hot review findings or themes into their systems of quality management to identify where remediating actions are required.

This includes:

- At three firms, findings are provided to the root cause analysis (RCA) team for trend analysis, including comparison against the root causes identified from cold file inspections.

- At three firms, thematic/recurring findings identified in hot reviews are discussed periodically with the audit methodology and learning & development teams. This ensures hot review findings are taken into consideration when developing training materials and planning changes to methodology and guidance.

- At one firm, hot review findings are considered when updating or creating new audit standardised tools and processes, to drive consistency across audits. The findings are also reported to the head of audit quality to determine if changes are needed for audit methodology and training materials.

- At one firm, the hot review findings are provided on a monthly basis to senior members from audit quality related functions, e.g., audit methodology, RCA, risks, remediation. This group considers if there are any emerging quality thematic findings and if any new remediating actions are necessary or if any existing actions should be revisited. Actions are logged and tracked in this group.

Good practice: At one firm, the data collated from hot review findings is held on a single digital database which enables a more timely and efficient analysis process. The remaining firms use a spreadsheet format.

Reporting of findings

Key decision-making and oversight bodies at firms should be kept up to date with the recurring and emerging quality matters identified in hot review findings to enable informed and efficient decision making. This can be achieved by formal and regular reporting of relevant metrics and performance indicators that are correlated with the activities and outputs of the hot review processes.

Observations: Six firms have formal reporting of hot review findings. The frequency of reporting ranges from monthly to annually. The nature of the content and the level of detail in the reporting vary by firm.

Five firms report on themes from ongoing hot reviews; three firms report on the status and/or coverage of the current cycle of hot reviews; one firm reports on the status of reviewers' resourcing; and two firms report on a wide range of management information relating to hot reviews, including a summary of key themes on audit technical matters, the timeliness of deliverables being ready for hot review, and the number of comments not addressed within a certain period of time.

Good practice: At one firm, the reporting pack issued to the executive body responsible for the overall audit quality at the firm contains a wide range of operational metrics and performance indicators reflecting the activities and outputs of the hot review team. This includes the year-to-date comparison of the top ten hot review observations raised, and the most commonly identified thematic observations for the month.

6. Hot review team structure

6.1 Size of the hot review function

The size of the hot review function varies across firms depending on the coverage and depth of their reviews, ranging from three to 42 full-time equivalents (FTEs), across staff grades from managers to partners. At most firms, the hot review function consists of mainly managers and senior managers, with oversight from a few directors and partners. At one firm, there are more directors than senior managers. At another firm, there is an equal split between senior managers and directors, and partners.

6.2 Composition of the hot review function

Hot reviews and audit work typically occur concurrently. Therefore, it can be particularly challenging for firms to allocate personnel from their audit practice to perform hot reviews, as the times of year when audit resourcing capacity is tight will also be the times when hot review support is needed the most.

Firms should review their resourcing needs for hot reviews on a regular basis, including for sector specialisms. This should be followed by considering the optimal ratio of reviewers drawn from the central audit quality team ("central team") and from the audit practice, in order to minimise resourcing disruptions to the audit practice, maintain the effectiveness and timeliness of the hot review process, and create opportunities for cross-team learning and professional development.

Drawing reviewers from the central team provides a depth of review experience, technical auditing knowledge, awareness of inspection and regulatory findings and ability to benchmark across audits. Moreover, this provides more certainty on reviewers' capacity to undertake hot reviews and respond to unexpected changes without having to manage conflicting audit delivery commitments.

Drawing reviewers from the audit practice enables them to obtain an understanding of the hot review process, expectations for high quality audits, and exposure to common themes and challenges on complex and judgemental audit areas. They can share learnings with the audit practice and promote the hot review process in more relatable ways when they return to the audit practice. Practitioners can also provide a valuable breadth of experience from resolving live accounting and auditing issues and challenges whilst carrying out audits.

Reviewers selected or nominated from the audit practice should have a strong level of technical and soft skills, a good understanding and record of quality audits, and be expected to have recent experience of undergoing an internal or external audit quality inspection. They should also have access to training tailored to the role of hot reviewer to ensure they understand what is expected of them as a reviewer.

Observations: At some firms, the hot review function uses a mix of reviewers from the central team and the audit practice. Individuals drawn from the audit practice usually perform hot reviews on a part-time or secondment basis.

| | Firm A | Firm B | Firm C | Firm D | Firm E | Firm F | Firm G |
|---|---|---|---|---|---|---|---|
| Central team | √ | √ | √ | √ | √ | √ | |
| Audit practice | √ | √ (until 2023/24) | √ | √ | √ | | |
| Offshore delivery centre | √ | | | | | | |

At the firm where offshore resources are utilised, they perform a preliminary review on certain workpapers in financial services audits under the supervision of onshore reviewers.

At the firm where only personnel from the audit practice undertake hot reviews, the firm is expanding its central team to allow all reviews to be done by the central team starting from the 2023/24 inspection cycle.

At one of the two firms that use only central team resources, a secondment programme has recently been launched for individuals from the audit practice to join the hot review function for at least six months on a full-time basis.

At the firms where reviewers are drawn from both the central team and the audit practice, the split varies. At one firm, reviewers are mainly from the audit practice (57%), whilst at the other firms, only 13% to 32% of reviewers are drawn from the audit practice.

At one firm, a few new experienced hires and individuals returning to work from long-term leave have been assigned to perform hot reviews on a secondment basis before moving to the audit practice. This provides the individuals with an opportunity to refresh their audit knowledge and understanding of high-quality audits.

6.3 Allocation of reviewers to individual audits

Why is appropriate allocation of reviewers important?

Reviewers should be allocated to audits based on their experience, sector specialism, seniority and conflicts of interest, and on the size and complexity of the audit being reviewed.

Sector specialism

Allocation of reviewers based on sector expertise allows reviewers with in-depth knowledge and understanding of certain industries and audit risks specific to the sectors to provide targeted and specialised support. Most firms allocate reviewers based on sector specialisms.

Nonetheless, allocating reviewers without regard to sector specialism can help limit reliance on assumed sector knowledge. It also provides firms with more flexibility in deploying reviewers.

Observations: At three firms, sector specialism is not always a key consideration when allocating reviewers to non-financial services audits. At one firm, sector specialism is required on reviews in the oil & gas and real estate sectors. At another firm, a second reviewer with more sector experience is assigned to support the lead reviewer if necessary.

Experience

An effective hot reviewer should be able to demonstrate their ability to exercise professional judgement, effective communication and influencing skills, confidence in handling difficult conversations, and willingness to constructively challenge engagement leaders. These skills are developed through experience of leading and performing complex audit work, managing audit staff, and interacting with senior audit partners.

Observations: Experience of hot reviewers varies between firms. At most firms, reviewers are at least senior managers. Only two firms allow managers to be the lead reviewers. At one of the two firms, managers can only perform thematic hot reviews if they have been reviewed externally and have evidenced the ability to perform high quality cold reviews previously.

Relative seniority

It is important to consider the relative seniority between engagement leaders and reviewers. Where reviewers are more junior, having a more senior reviewer oversee the review can ensure the reviewers receive appropriate backing and support.

Observations: At all firms, hot reviews are performed by a lead reviewer and overseen by a supervisor. A supervisor may undertake certain elements of the hot review, monitor the progress of the review against agreed timelines, and be the first point of contact for the discussion of contentious issues.

At two firms, a partner is assigned to each full-scope hot review to provide oversight. At the remaining firms, a director is typically allocated to each review.

Size and complexity of audits

Supporting reviewers may be allocated to a large and/or complex audit to provide additional resource or expertise within the planned timeline of the audit.

Observations: Depending on the size and complexity of the audit, the number of reviewers allocated to a review varies across firms, ranging from one to four reviewers.

Conflicts of interest

When allocating reviewers, familiarity with engagement leaders, engagement teams and the audited entities should be considered to reduce bias in hot reviews. This includes considering if the reviewer has worked with the engagement leader, and if the engagement leader has any significant involvement in the reviewer's performance appraisal process. This is particularly relevant where reviewers are drawn from the audit practice.

Rotating reviewers between audits on a regular basis also helps reduce bias.

Observations: At three firms, reviewers drawn from the audit practice are not assigned to review engagement leaders they work with or have worked with previously. At one firm, reviewers are required to confirm their independence on the firm's system before they commence a hot review.

Most firms assign reviewers to different audits every year. At one firm, reviewers can review the same audit for three years at most, though they are expected to review different audit areas each year.

Use of specialists and/or experts

Reviewers should receive support and guidance to enable them to make an initial assessment of the need for specialists and/or experts when scoping a review.

Observations: The extent of use of specialists and/or experts on hot reviews varies.

Most firms make some use of specialists and/or experts outside of the core audit practice on their hot reviews.

| | Firm A | Firm B | Firm C | Firm D | Firm E | Firm F | Firm G |
|---|---|---|---|---|---|---|---|
| IT specialists | √ | √ | √ | √ | √ | | |
| Tax specialists | √ | √ | √ | | | | |
| Other specialists/experts | √ | √ | √ | √ | | | |

IT specialist resources are assigned to support hot reviews across most firms. At one firm, there are IT reviewers within the hot review function dedicated to providing support on hot reviews. At another firm, IT specialists provide support if the audit approach places significant reliance on IT controls. Another firm is currently piloting the use of IT specialists on hot reviews. One of the two remaining firms, where IT specialists are not currently used, is planning to assign them to provide support from the 2023/24 cycle.

Three firms use tax specialists to provide support on hot reviews. At one firm, they are used when there is a significant audit risk arising from tax matters.

Four firms also make use of experts and/or specialists in other areas, such as IFRS 9.

6.4 Reviewers' training

Why is reviewers' training important?

Reviewers should have technical knowledge, including of complex and specialised audit areas, and awareness of recurring inspection findings and common audit approach pitfalls. This should be accompanied by strong soft skills. Training tailored to the role of hot reviewers can drive consistent development of these skills and knowledge.

Observations: The extent of training offered to hot reviewers varies between firms.

| | Firm A | Firm B | Firm C | Firm D | Firm E | Firm F | Firm G |
|---|---|---|---|---|---|---|---|
| Mandatory audit training | √ | √ | √ | √ | √ | √ | √ |
| Technical live briefing | √ | √ | √ | √ | √ | √ | √ |
| Shadow review | √ | √ | √ | √ | √ | √ | |

The technical live briefings aim to provide updates on technical matters and recent key findings from internal and external inspections, and give reviewers an opportunity to discuss live issues with peers.

Reviewers at all firms are required to complete the same mandatory training as the audit practice. This ensures reviewers refresh their knowledge of technical accounting and auditing matters and maintain awareness of developments in accounting and auditing standards, as well as the firm's own audit methodology, tools and software. At the firm where offshore resources are deployed in performing hot reviews, staff are required to complete UK-specific training in addition to the firm's global IFRS and audit training.

At two firms, reviewers from both the audit practice and the central team receive training materials specific to undertaking hot reviews. At one firm, each set of training materials focuses on a specific audit area, e.g., controls & service organisations, data & analytics, climate risks, sampling, going concern and revenue. At three firms, reviewers are provided with formal or informal learning and training sessions on climate-related risks.

Most firms provide an induction session for new reviewers for each review cycle, covering changes to the hot review process. Reviewers at all firms are provided with regular technical live briefings, at a frequency ranging from weekly to monthly.

Two firms offered soft skills training sessions tailored for hot reviewers. One firm delivered bespoke in-person soft skills training sessions to hot reviewers in the past year. This covered the coaching role of reviewers, practical matters relating to reviewers' interactions with engagement teams and behavioural psychology and biases. At another firm, a soft skills training course on different styles of interaction and decision-making processes has been planned specifically for hot reviewers for late 2023.

Good practices:

- Shadow review: Most firms assign new reviewers to shadow more experienced reviewers for a period before undertaking a review on their own. This is a form of on-the-job coaching, allowing new reviewers to learn how to perform a hot review and benefit from the experience of a more established reviewer, who will identify development points and assess when they are ready to perform reviews independently. This helps establish supportive personal working relationships, facilitates knowledge exchange, and enables role-modelling of behaviours expected of a hot reviewer.

- 'Buddy': At two firms, new reviewers are assigned a 'buddy' to help them integrate into the hot review team and provide support over the first few months. This creates an initial point of contact for the new reviewer, helps them get familiar with the hot review team, culture and processes, and promotes informal learning.

- 'Topic Lead' or 'Subject Matter Expert' role: At one firm, some reviewers are allocated such a role, and develop enhanced knowledge of a particular audit area, e.g., climate risk. They are responsible for keeping up to date with new guidance, responding to technical queries, and identifying recurring or emerging issues from hot review and inspection findings.

6.5 Guidance, aide memoires and work programmes

Guidance documents can help set out the objectives, methodology and related processes for hot reviews (e.g., escalation and feedback processes), and define the responsibilities of a reviewer. They can provide clarity on how a hot review should be undertaken and promote consistency and efficiency. Reviewers should ensure they are familiar with the guidance documents and apply them appropriately when performing hot reviews. Regular review of the guidance documents is recommended so that any necessary changes to the hot review processes are reflected in them on a timely basis.

Observations: Two firms have published centrally prepared guidance documents setting out how a hot review should be undertaken.

Good practice: At one firm, comprehensive hot review guidance was issued setting out the objectives, purpose, and processes for hot reviews. This includes how audits are selected, processes for the scoping, planning and execution of reviews, how findings are raised and evaluated, how reviewers' time should be managed, available reviewer support and training, performance measures for reviewers and annual reporting.

Example: At three firms, the aide memoire for sensitivity analysis in impairment assessments includes prompts to assess whether the audit team has defined a range of reasonably possible outcomes against which to compare management's assessment, performed reverse stress testing and considered downside scenarios in assessing sensitivities.

Work programmes provide reviewers with prescriptive review procedures on specific audit areas. Reviewers are typically required to document their detailed review and their conclusions on the appropriateness and adequacy of the work performed by the engagement teams.

Observations: Five firms have centrally prepared aide memoires and/or work programmes for hot reviewers. At one of the remaining two firms, work programmes will be rolled out for the 2023/24 hot review cycle.

The format, style and level of detail vary. Two firms include references to their audit methodology, audit work programmes and knowledge library in their aide memoires so that reviewers can assess to what extent the audit work performed by engagement teams has followed the centrally developed methodology and work programmes.

At two firms, a separate aide memoire was issued for financial services audits.

Good practices:

- At one firm, the aide memoire includes prompts on when to escalate matters for certain audit areas, e.g., when audit teams have tested no, or very few, journal entries.

- At one firm, the aide memoire includes different prompts for reviewers to consider at different stages of an audit, e.g., at planning, between planning and year-end fieldwork, at year-end fieldwork and throughout the audit process.

7. Thematic hot reviews

Thematic hot reviews are additional to hot reviews on individual audits. They are shorter, targeted reviews of specific audit areas across multiple ongoing audits. The topics are usually selected based on prior year recurring findings from internal and external quality inspections, developments in accounting and auditing standards, changes in audit methodology or emerging risks to audit quality.

Thematic hot reviews allow firms to review specific audit areas in greater depth on a larger population of ongoing audits, to identify emerging and thematic weaknesses in these areas so that remediating actions can be taken on a timely basis. They may also allow firms to assess the user-friendliness of specific new audit work programmes, and provide targeted support on specific audit areas to ensure work programmes are being used appropriately.

Observations: Only three firms currently have a thematic hot review programme, including one firm that is currently starting its first year of thematic hot reviews, covering risk assessment and impairment. The thematic hot review programmes at the other two firms are more established, covering topics such as revenue recognition, accounting estimates, audit of cash and cash flow statements, group audits and new accounting standards (IFRS 9, IFRS 15).

At one of the three firms, more emphasis is placed on performing thematic hot reviews than on hot reviews of individual audits. This is reflected in the higher number of thematic hot reviews performed compared to individual audit hot reviews.

At one firm, thematic reviews have been introduced in the 2023/24 inspection cycle.

8. Measuring the effectiveness of hot review programmes

Why is measuring the effectiveness of hot review programmes important?

Firms should determine whether hot reviews are achieving the desired purpose and objectives. This allows firms to identify areas of improvement and enhancement to help calibrate the process to ensure that their hot review programmes are robust and fit for purpose.

Where a firm's hot review programme has been identified as a response to its quality risks, this programme will need to be monitored as part of ISQM (UK) 1 to ensure it effectively addresses the relevant risks.

The most common ways in which firms measure the effectiveness of their hot review programmes are:

a) Internal and external file inspections

An internal or external file inspection is undertaken after the audit is signed off. Therefore, if an effective hot review was undertaken, significant quality matters should have been resolved before the audit was signed off and should not be identified in the internal or external inspection, if the inspection and hot review cover the same audit areas. Analysing internal and external file inspection results for audits that have undergone hot reviews is therefore one of the most direct ways to assess the effectiveness of the hot review process.

Observations: All firms assess the outcomes of external and internal file inspections for the audits where hot reviews were undertaken during the year.

At two firms, the role of the hot review is specifically considered in the RCA processes, if the audit is subject to RCA due to an adverse file inspection result, in order to understand why findings were not identified and remediated during the hot review.

Good practice: At one firm, if the audit had a hot review and was then inspected externally and/or internally, the relevant reviewers undertake a learning and de-brief process. This includes considering whether the inspection findings were within the scope of the hot review, whether the hot reviewer had identified the same or similar observations, and the extent to which the audit team had addressed the hot review comments. The reviewers are required to document the learning points in a standard template for review by the Director heading up the hot review function.

b) Feedback from engagement teams and leaders

Feedback on the hot review process can help measure its effectiveness. This should cover the design of the hot review process and performance of the reviewers, including if the review felt robust and if the reviewers provided constructive challenge and were appropriately supportive. This feedback may be used in the year-end performance assessment of the reviewers. Care is needed to ensure this is sensibly considered and does not compromise reviewers' independence when performing reviews.

Observations: At six firms, reviewers are expected to obtain verbal or written feedback from engagement teams. At one firm, a standard survey is issued to engagement teams to ask whether they think the hot review improved the quality of the audit reviewed.

c) Sample check of completed hot reviews

Review of a sample of completed hot reviews, by experienced reviewers independent of the reviews selected, can evaluate the quality of hot reviews undertaken and assess if the hot review framework and guidelines were implemented effectively.

Observations: At one firm, the effectiveness of the hot review process is assessed through quality assurance reviews on a sample of completed hot reviews each year by independent and experienced reviewers. The objective is to identify good practice and areas of improvement for individual reviewers and the wider hot review team. The risk function also reviews a sample of two hot reviews each quarter to check whether all hot review comments were appropriately closed before the audit opinion was signed off.

d) Periodic evaluation of the hot review programme

A periodic robust evaluation of the hot review programme, conducted by the leaders heading up the hot review function, can help identify areas where changes or enhancements for the next inspection cycle may be needed to achieve the desired objectives. This process may utilise the outputs of the various mechanisms above, effects of other quality initiatives in place, and the hot review monitoring processes performed throughout the year.

Observations: Two firms conduct an annual evaluation of the effectiveness of their hot review programmes, the results of which are reported to a key decision-making body at the firm for discussion or views.

Good practice: At one firm, a debrief on the hot review programme is provided to the audit executives on an annual basis. This includes considering the effectiveness of the programme and suggesting any changes to the programme for audit executives' views and consideration.

9. Promoting the hot review process

Hot reviews are less likely to be effective if engagement leaders and teams are not supportive of the role of hot reviewers and do not engage constructively. This can happen if individuals do not embrace the culture of continual improvement and learning from mistakes during a hot review. This may result in engagement leaders ignoring hot review comments, not utilising the resolution and escalation process, and signing off audits without full hot review clearance.

"Tone from the top" is needed to deliver key messages regarding a firm's commitment to implement an effective hot review process to drive audit quality improvements. Audit leadership should support and promote the value of hot reviews throughout their communications to their audit practices.

Leadership need to find relatable ways to explain the hot review process and the support provided by reviewers. Where reviewers are drawn from the audit practice, this can be facilitated by having the secondees champion the hot review process when they return to the audit practice.

Observations: At all firms, the role of hot reviewers is promoted in firm-wide communications, and in training sessions/meetings for engagement leaders. This allows the messages on the value of the hot review process, and the behaviours expected of reviewers, engagement teams and leaders, to be consistently communicated to the audit practice.

Good practices:

- The partner heading up the hot review team at one firm visited several key local offices to communicate the value of hot reviews. This helped build constructive relationships, promoted the role of hot reviewers and enabled a better understanding of the challenges experienced by the local offices.

- At one firm, hot reviewers delivered training to audit staff and partners focused on AQR in-scope audits. This gave reviewers an opportunity to share their experiences and lessons learnt to promote the value of hot review processes.

- At one firm, a number of hot reviewers are part of the firm's network of individuals who specifically help bridge the gap between audit quality central teams and the audit practice. Through participation in this network, reviewers have opportunities to promote the role of hot reviewers to audit business units in their team meetings.

Financial Reporting Council 8th Floor 125 London Wall London EC2Y 5AS +44 (0)20 7492 230

Visit our website at www.frc.org.uk

Follow us on Twitter @FRCnews or LinkedIn.

Footnotes

1. The seven Tier 1 firms in the 2022/23 inspection cycle were: BDO LLP, Deloitte LLP, Ernst & Young LLP, Grant Thornton UK LLP, KPMG LLP, Mazars LLP, PricewaterhouseCoopers LLP. Effective from May 2023, Grant Thornton UK LLP is now included within Tier 2.

2. International Standard on Quality Control (UK) 1 (Revised November 2019): Quality Control for Firms that Perform Audits and Reviews of Financial Statements, and Other Assurance and Related Services Engagements (Updated May 2022).

3. The typical categories of audits that the AQR is likely to inspect, with effect from 1 January 2021, are summarised at this link: https://www.frc.org.uk/getattachment/8e03832a-bc57-4044-a490-817d846d69aa/AQR-Scope-of-Retained-Inspection-September-2022.pdf

4. Only applicable to FTSE 350 audited entities.

5. Sectors identified annually to be given priority by the FRC in selecting corporate reports and audits for review. The sectors identified by the FRC for 2023/24 supervisory focus are (i) travel, hospitality and leisure, (ii) retail and personal goods, (iii) construction and materials, and (iv) industrial transportation.

6. The processes within the scope of each of the three key audit phases have been broadly summarised in "What Makes a Good Audit?" published by the FRC in November 2021: https://www.frc.org.uk/getattachment/117a5689-057a-4591-b646-32cd6cd5a70a/What-Makes-a-Good-Audit-15-11-21.pdf

7. Reviewers do not need to check if actions have been evidenced for comments rated as green.

8. Clearance is provided if comments rated as red and amber are closed.