The content on this page has been converted from PDF to HTML format using an artificial intelligence (AI) tool as part of our ongoing efforts to improve accessibility and usability of our publications. Note:

- No human verification has been conducted of the converted content.

- While we strive for accuracy, errors or omissions may exist.

- This content is provided for informational purposes only and should not be relied upon as a definitive or authoritative source.

- For the official and verified version of the publication, refer to the original PDF document.

If you identify any inaccuracies or have concerns about the content, please contact us at [email protected].

Using technology to enhance audit quality

Introduction

This paper sets out the Financial Reporting Council's (FRC) analysis of responses to our recent consultation, Technological Resources: Using Technology to enhance audit quality. It incorporates discussion of the responses we have received as a direct result of our consultation, as well as discussion of other matters that have arisen throughout additional outreach and engagement with stakeholders.

Our key objective in undertaking this consultation, and subsequent outreach discussions, was to develop an in-depth understanding of the opportunities, challenges and concerns that are arising as a result of the increasing use of technological resources in audit. This allows us to consider factors relating to technology when developing future guidance, revising standards, and engaging with international standard setters.

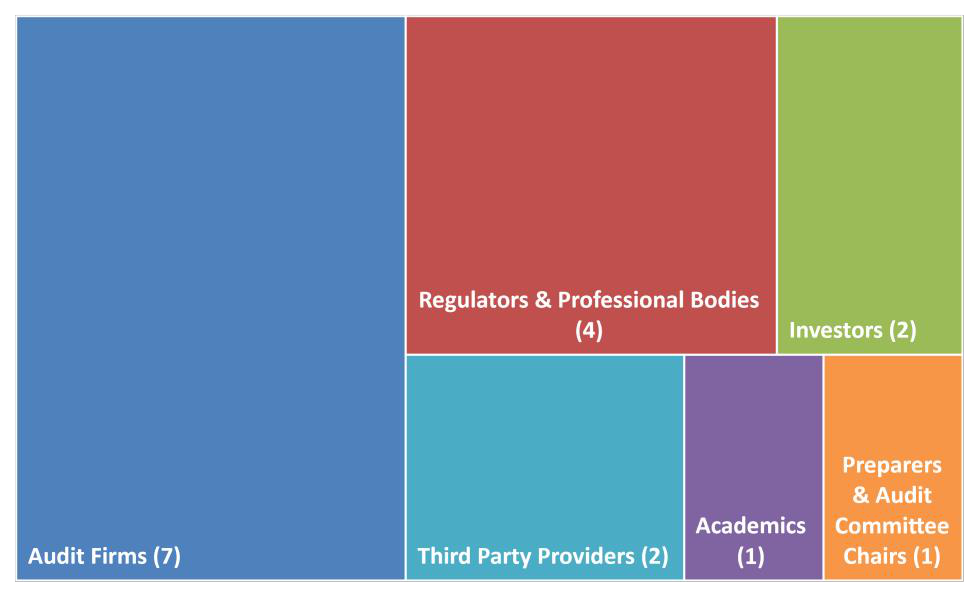

We received 17 replies to our consultation and conducted significant additional outreach, allowing us to develop an understanding of a broad range of matters. Respondents included audit firms and professional bodies, as well as investors, third-party technology providers and academics.

Almost all those that responded agreed that the use of technology could significantly improve audit quality, when deployed at the right time in the audit process and, crucially, by those with the right training. Respondents also agreed that, whilst additional application material and guidance would be beneficial, the current assurance model and audit standards do not represent a significant impediment to the development and deployment of technology in audit.

Training and skillset were identified as many respondents' primary concerns. A significant majority of respondents saw the recruitment of staff with the right skillsets, alongside the development of appropriate training for current staff (both trainees and experienced), as a priority. Respondents also described significant challenges in accessing high-quality client data in a reliable and consistent format. This means that technological resources intended to improve audit quality can be difficult to deploy in practice, as substantial work on the data itself is required before analysis can be conducted.

Where we feel we have identified a consensus around a specific action that the FRC can take to address concerns, or where we have determined that no action is currently necessary, we have laid that out within the relevant section in the “FRC Response and Actions” boxes.

Technology, and its use in audit, is increasingly at the forefront of discussions between regulators, standard setters, and audit practitioners. Audit firms and third-party providers have committed significant financial and human resources to developing and deploying technology in audits, leading to a significant increase in its usage. This is reflected, for example, in the revised International Standard on Auditing (UK) 315 (ISA (UK) 315), which includes specific application material addressing the use of automated tools and techniques.

In March 2020 the FRC's Audit Quality Review (AQR) team published a thematic review, The use of technology in the audit of financial statements, updating its 2017 thematic review, The use of data analytics in the audit of financial statements. This most recent review found that the use of automated tools and techniques in the form of audit data analytics (ADA) was routine at the largest UK firms, with significant investment made and implementation occurring as a package with associated methodological changes, training and support. It also found that emerging technologies such as machine learning and other predictive analysis tools, whilst still mostly at the research stage, presented opportunities to improve quality, while also bringing unique challenges for both the firms and regulators.

Similarly, in his December 2019 report Assess, Assure and Inform: Improving Audit Quality and Effectiveness, Sir Donald Brydon included a number of comments and recommendations pertaining to the use of technology in audit and was also interested in the possible links between technology and audit quality. He identified a number of key areas for consideration, including data quality and training.

Sir Donald highlighted, as did the 2020 AQR review, that the automation of data-related audit tasks was already well underway and that its expansion was inevitable. He described how new technology was still most often used in the risk assessment process, but highlighted that its use is becoming more widespread in other areas of audit, with greater use of ADA in audit testing.

The Competition and Markets Authority (CMA), in its 2018-19 study into the statutory audit market, considered whether technology might be a barrier to entry and expansion in the market by challenger firms. The CMA identified that technological resources had increasingly become a differentiator for audit committees when selecting audit firms during tender processes, and that the Big Four firms appeared to have an advantage over other firms in terms of their technological platforms, methodologies, and processes. While challenger firms were also investing in technology, this was at a lower absolute level than the Big Four firms' investment. While the CMA did not recommend any specific measures to give challenger firms greater access to technology, it noted that some stakeholders had raised this possibility and suggested, in its final report, that the regulator might wish to consider this further.

Given the increasing significance of technological resources in audit, a key challenge is to ensure that auditing standards remain fit for purpose, with requirements that ensure the delivery of high-quality audit, while not restricting the development and deployment of technological resources that can improve quality.

With that in mind, we launched our consultation to aid in identifying the practical steps that we, as the UK's audit regulator, can take to support the use of technology in improving audit quality, and to help inform our engagement with international standard setters. In addition to receiving formal written responses to our consultation, we conducted significant additional outreach in the form of roundtables and one-to-one discussions to ensure that we considered as wide a range of stakeholder views as possible. Our consultation received 17 replies from a diverse range of stakeholders, all of which are available in full on the FRC's website.

Our response below is structured around key themes, both those originally included within our consultation and those that have emerged through ongoing outreach and discussion with stakeholders. Artificial Intelligence (AI) and Machine Learning (ML) were widely discussed topics, so given their prominence, and the often unique opportunities and challenges those technologies present, we have laid out our discussion of AI and ML usage separately within each section.

Given the almost constantly evolving landscape in this area, conversations about the relationship between technology and audit are ongoing. Our response captures current thinking on the present and future of technology in audit, but further discussion is still required in many areas. We believe that our response below will serve as a foundation for discussions about the role we as a regulator take in relation to the use of technological resources in audit, be that enhancing relevant standards, influencing international standard setters, developing guidance on select topics, or clearly communicating our expectations regarding the use of technology.


Technology and Audit Quality

A clear theme arising from all responses was the belief that the increasing use of technology, including advanced tools such as AI, could enhance quality over and above efficiency gains, provided that the technology is used at the right time, in the right place, and by individuals who are appropriately trained to use such tools.

The following were areas in which respondents believed the use of technological resources was most likely to enhance quality:

- Reduced detection risk where automated tools and techniques are used to sample populations in a more representative way.

- Better understanding of the client's operations and controls, leading to a more sophisticated, focused, and capable risk assessment process.

- Time freed up on routine tasks, allowing auditors to focus their attention on more judgmental areas.

- Improved connectivity with audited entities, making it easier to track information requested and provided and questions raised, and enabling earlier identification of delays.

- Use of predictive analysis to test complex areas such as financial instruments, where automated tools and techniques are suited to testing the complex models and assumptions.

Two respondents raised the idea of using ADA to test 100% of a population rather than selecting a sample to test. However, this is not how the FRC has seen these tools currently being used. In practice, automated tools and techniques are used not to test 100% of a population, but to more appropriately analyse an entire population so that any subsequent sampling can be more representative and statistically appropriate.
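To illustrate the distinction, the minimal sketch below analyses every item in a population before any sample is drawn: items at or above an assumed individual-significance amount are set aside for individual testing, and the remainder are grouped into value strata and sampled within each stratum. The function name, thresholds, strata and ledger data are hypothetical, and the sketch does not represent any firm's methodology or a sampling approach prescribed by the auditing standards.

```python
import random
from collections import defaultdict


def stratified_sample(amounts, individually_significant, strata_bounds, per_stratum, seed=0):
    """Analyse the whole population, then sample within value strata.

    amounts: mapping of item id -> monetary amount (hypothetical data).
    individually_significant: items at or above this absolute amount are
        selected for individual testing rather than sampled (assumed rule).
    strata_bounds: ascending upper bounds for the value strata (assumed).
    per_stratum: number of items to draw from each stratum.
    """
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    key_items = []
    strata = defaultdict(list)
    for item, amount in sorted(amounts.items()):
        value = abs(amount)
        if value >= individually_significant:
            key_items.append(item)
            continue
        # Place the item in the first stratum whose upper bound exceeds it;
        # anything above the last bound falls into a final catch-all stratum.
        for idx, bound in enumerate(strata_bounds):
            if value < bound:
                strata[idx].append(item)
                break
        else:
            strata[len(strata_bounds)].append(item)
    sample = {
        idx: sorted(rng.sample(items, min(per_stratum, len(items))))
        for idx, items in sorted(strata.items())
    }
    return key_items, sample


ledger = {"INV001": 120.0, "INV002": 95000.0, "INV003": 4500.0,
          "INV004": 300.0, "INV005": 7200.0, "INV006": 60.0}
key_items, sample = stratified_sample(ledger, individually_significant=50000,
                                      strata_bounds=[1000, 10000], per_stratum=2)
print(key_items)  # ['INV002']
print(sample)
```

Because every item is examined before selection, the auditor knows the composition of the population the sample is drawn from, rather than testing every item individually.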

Technological Resources and Auditing Standards

Most respondents did not believe that the current auditing standards, or assurance model, created a significant obstacle to technological innovation. No respondents identified any specific objective or requirement in the auditing standards that caused particular problems. However, most felt that the auditing standards could do more to promote or facilitate technological innovation, including more references and material relating to the use of automated tools and techniques and ADA.

ISA (UK) 315 demonstrates that more material relating to the use of technology is already being included in the auditing standards, reflecting the increasing importance of technology in delivering quality audits.

Recent revisions to ISA (UK) 315 highlight that it is more than possible to adapt the current assurance model to the ever-evolving technological landscape, recognising that technology is increasingly at the forefront of audit.

One audit firm also discussed International Standard on Quality Management 1 (ISQM 1) Quality Management for Firms that Perform Audits or Reviews of Financial Statements, or Other Assurance or Related Services Engagements, in its response, which the FRC will be consulting on in 2020/21. The firm believed that the material in ISQM 1, particularly that around increasing firm leadership and accountability, provides a good framework within which to consider technology and how it is integrated into audit firm methodologies and quality control systems, helping to ensure that technology is deployed appropriately and in line with the firm's governance procedures.

Dealing with potential exceptions when using ADA

A number of replies highlighted that many auditors face challenges with the large number of potential exceptions generated when using ADA. Most, however, agreed that the cause of this was most often an inappropriate initial scoping of the tool, and that with a proper understanding of the parameters, the exceptions generated are not unmanageable. Indeed, five respondents did not see this as a negative, believing it allows the auditor to gain a better, more detailed understanding of both the data and their own tools in the process of refining their assumptions.

Thoughtful application of the terminology of the auditing standards, particularly the use of the term “exception”, is important here in designing audit procedures that are both effective and efficient. In using ADA to understand and assess the population being analysed, parameters may require re-calibration after initial analysis to ensure the tool is correctly identifying outliers that merit further investigation as exceptions.
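The effect of calibration can be seen in a small sketch: the same population yields a different number of potential exceptions depending on the threshold chosen, which is why it is the rationale for the threshold, rather than the count it produces, that needs documenting. The median-based (robust) score and both thresholds are assumptions for illustration only; they are not drawn from the auditing standards or from any respondent's tool.

```python
from statistics import median


def flag_outliers(values, threshold):
    """Return the indices of values whose robust z-score exceeds `threshold`.

    The score uses the median and the median absolute deviation (MAD), an
    assumed choice that is less distorted by the outliers themselves than a
    mean and standard deviation would be.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # No spread around the median: flag anything that differs from it.
        return [i for i, v in enumerate(values) if v != med]
    # 0.6745 rescales the MAD so scores are comparable to standard z-scores.
    return [i for i, v in enumerate(values) if abs(0.6745 * (v - med) / mad) > threshold]


amounts = [100, 102, 98, 101, 99, 250, 97, 103, 180]
for threshold in (1.0, 3.5):
    print(threshold, flag_outliers(amounts, threshold))
# 1.0 [2, 5, 6, 8]
# 3.5 [5, 8]
```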

This situation is analogous to the development of an expectation when conducting analytical procedures in line with ISA (UK) 520 Analytical Procedures. ISA (UK) 520 requires that the auditor “develops an expectation of recorded amounts or ratios and evaluate whether the expectation is sufficiently precise to identify a misstatement”. It is often the case that the first expectation built is insufficiently precise, and that subsequent refinement is required as the auditor develops a stronger understanding, before the expectation is suitable for comparison with the recorded amount and can be used to generate audit evidence.
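The mechanics of refining an expectation can be sketched briefly. In this hypothetical example, a crude expectation (prior-year revenue with an assumed uplift) leaves a large unexplained difference, while a refined expectation built from independent operational data (units shipped multiplied by average price) does not. All figures and the threshold are invented for illustration; whether an expectation is sufficiently precise, and what difference is acceptable, remain matters of auditor judgment under ISA (UK) 520. Refinement should be driven by better independent information, as here, and not by adjusting the expectation until the difference disappears.

```python
def evaluate_expectation(recorded, expected, threshold):
    """Compare a recorded amount with the auditor's independent expectation.

    Returns the difference and whether it exceeds `threshold`, the amount the
    auditor has judged acceptable without further investigation (assumed input).
    """
    difference = recorded - expected
    return difference, abs(difference) > threshold


recorded_revenue = 1_050_000  # hypothetical monthly revenue per the ledger
threshold = 50_000            # hypothetical acceptable difference

# First attempt: prior-year monthly revenue uplifted by an assumed 5% growth.
crude = 900_000 * 105 // 100
print(evaluate_expectation(recorded_revenue, crude, threshold))    # (105000, True)

# Refined attempt: independent operational data (units shipped x average price).
refined = 21_000 * 49.5
print(evaluate_expectation(recorded_revenue, refined, threshold))  # (10500.0, False)
```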

One reply did caution that auditors should be aware of a potential bias, in most cases unconscious, towards adjusting thresholds to generate the number of potential exceptions they expect to see, or perhaps have allocated time to deal with.

An issue raised by a number of respondents relates to data quality: large numbers of potential exceptions can be generated where the data used is of poor quality to begin with, causing the ADA to produce a large number of “false” exceptions. Respondents linked these comments to their responses on data quality, indicating that a common data model, or approach to data access, may be helpful in limiting these initial “false” exceptions.
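Screening data before analysis is one way to stop quality problems surfacing as “false” exceptions. The sketch below applies three illustrative checks (a valid posting date, a numeric amount and a unique entry identifier) and sets failing records aside with the reason recorded, so they can be followed up rather than silently dropped. The field names and checks are hypothetical and are far narrower than anything a common data model would specify.

```python
from datetime import date


def split_by_quality(entries):
    """Separate records that fail basic checks before running ADA.

    Returns (clean, rejected); rejected holds (entry id, list of problems)
    so the excluded records can be followed up rather than silently dropped.
    """
    clean, rejected = [], []
    seen_ids = set()
    for entry in entries:
        problems = []
        if not isinstance(entry.get("posted"), date):
            problems.append("missing or invalid posting date")
        amount = entry.get("amount")
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(amount, bool) or not isinstance(amount, (int, float)):
            problems.append("non-numeric amount")
        if entry.get("id") in seen_ids:
            problems.append("duplicate entry id")
        seen_ids.add(entry.get("id"))
        if problems:
            rejected.append((entry.get("id"), problems))
        else:
            clean.append(entry)
    return clean, rejected


entries = [
    {"id": "JE1", "posted": date(2020, 3, 31), "amount": 1200.0},
    {"id": "JE2", "posted": date(2020, 3, 31), "amount": "1,200"},
    {"id": "JE3", "posted": None, "amount": 450.0},
    {"id": "JE1", "posted": date(2020, 3, 31), "amount": 1200.0},
]
clean, rejected = split_by_quality(entries)
print([e["id"] for e in clean])  # ['JE1']
print(rejected)
```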

Documenting the use of Technological Resources

Most respondents, as detailed above, agreed that the auditing standards did not inhibit the use of technological resources, but some suggested it would be beneficial if the auditing standards recognised the complexity of transitioning from more traditional auditing techniques to approaches making greater use of ADA.

A majority of respondents agreed that the increased use of automated tools and techniques brought documentation challenges, including concerns that auditors don't clearly document how technology has been deployed, and crucially, why it was deemed suitable for use in any particular area of the audit file. Some respondents commented that this may be a greater risk where the use of ADA is centrally mandated in certain areas of the audit, potentially leading to its inappropriate deployment.

One respondent, however, believed that, whilst there are challenges, the use of automated tools and techniques could make documentation easier. As long as due consideration is given to the suitability of any particular technique, this respondent suggested, automated tools can be utilised to provide more consistent and detailed documentation.

AI, ML and Audit Documentation

Three respondents to our consultation highlighted the particular challenges that exist when documenting the use of AI in the audit process, given the iterative nature of the algorithms deployed and the difficulty in explaining how AI has been used to help make a decision, for example deciding to test certain items as a result of AI tools having identified those items as higher risk.

One respondent identified a potential solution, widely discussed in the AI community: ensuring that AI tools used in audit take a “white-box” approach, rather than a “black-box” approach, thereby creating more understandable, and thus explainable, models. “Black-box” AI tools have observable inputs and outputs but lack clarity on the inner workings of the tool: a tool may identify an item as riskier, but there is no easily discernible “how” or “why” in the process. “White-box” tools, on the other hand, have observable inputs and outputs and also understandable behaviours and relationships in arriving at those outputs, though sometimes at the cost of lower accuracy. There may therefore be something of a trade-off between accuracy, understandability, explainability, and the ease with which a tool can be documented.

A great deal more research and discussion is needed in this area, but it is likely that, when deployed in audits, “white-box” AI tools will be easier to explain, and thus likely easier to document in accordance with the ISAs, than “black-box” AI tools.
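The documentation benefit of a white-box approach can be shown with a deliberately simple example. The sketch below is not an AI model: it is a transparent, rule-based scorer in which every point added to a journal entry's risk score is recorded alongside the rule that produced it, which is the kind of traceable “how” and “why” a white-box approach aims to provide. The rules, weights and field names are hypothetical. A black-box model might rank the same entries more accurately but would return only the score.

```python
from dataclasses import dataclass, field


@dataclass
class ScoredEntry:
    entry_id: str
    score: int = 0
    reasons: list[str] = field(default_factory=list)


# Hypothetical, human-readable rules: (description, weight, test).
RULES = [
    ("posted at a weekend", 2, lambda e: e["weekday"] >= 5),  # 5 = Sat, 6 = Sun
    ("round amount", 1, lambda e: e["amount"] % 1000 == 0),
    ("posted by a user without posting rights", 3, lambda e: not e["user_can_post"]),
]


def score(entry):
    """Score an entry and record every rule that contributed to the score."""
    result = ScoredEntry(entry["id"])
    for description, weight, test in RULES:
        if test(entry):
            result.score += weight
            result.reasons.append(description)
    return result


print(score({"id": "JE42", "weekday": 6, "amount": 5000, "user_can_post": True}))
# ScoredEntry(entry_id='JE42', score=3, reasons=['posted at a weekend', 'round amount'])
```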

Regulatory Expectations on the use of Technological Resources

As mentioned in the initial consultation, we recognise that, as the UK audit regulator, we have a role in setting clear expectations for the appropriate development and use of technology. We should also be consistent in our approach to regulating the use of technology, including consistency when discussing the use of technological resources with stakeholders.

Additionally, a number of respondents expressed concerns about explaining the tools they used, both in sufficient detail within an audit file to meet ISA (UK) requirements, and in understanding what FRC inspectors expect regarding documentation of the use of technological resources when audit files are reviewed. A lack of understanding of regulatory expectations can serve as a barrier to the adoption of tools which may aid in improving audit quality.

FRC Response and Actions

The FRC will develop, with the assistance of the newly reformed Audit Technical Advisory Group (TAG), guidance for auditors to aid in dealing with the volume of potential exceptions generated when using ADA, highlighting best practice and key documentation considerations.

The FRC will develop internal guidelines to assist staff in their engagement with stakeholders, setting out our expectations in relation to the use of technological resources in audit, including, for example, what we should reasonably expect an auditor to be able to explain concerning how technology, including AI, was deployed in an audit. We will develop these guidelines with the support of the Information Commissioner's Office (ICO) and the Alan Turing Institute, using some of the key ideas and themes identified in their recent joint report Explaining Decisions made with AI. If suitable, this may be developed into external guidance at a later stage.

Training for current and future auditors

Perhaps the most significant area of agreement amongst respondents was that auditors, both trainee and experienced, are not always receiving appropriate training in technology. Training current staff members, and the difficulties of hiring staff with suitable qualifications, were identified as major challenges by most respondents.

There is a distinction between the training that junior auditors receive as part of their professional qualifications and the training received within the audit firms. Most felt that the training received during professional qualifications did not meet the needs of the modern auditor and believed much greater effort could be made by exam providers to ensure these qualifications prepare auditors for the use of technology.

An additional challenge is training more senior staff in new technologies, with trainers occasionally encountering difficulty in convincing them of technology's value in enhancing audit quality, whilst some staff members are concerned that technology will replace them. Audit firms should be transparent about their intentions when deploying technological resources in audit and communicate effectively with staff so that they understand the firm's ultimate goal in deploying those resources. Technological resources are tools, which still need people to develop and deploy them, and so in many instances they are not a direct replacement for trained auditors but instead may facilitate judgements and decision making.

Future training for auditors is likely to be very different from the training currently provided. In his report, Sir Donald noted that future assurance providers are unlikely always to be accountants who have taken the traditional training route; the skills of an auditor differ from those of an accountant, and there is an argument that someone providing assurance on, for example, Environmental, Social and Corporate Governance (ESG) disclosures, or on a company's cyber security measures, does not need to be an accountant. The demand is not usually for auditors to become data scientists and AI experts themselves (though firms will of course make use of both in developing tools), but for them to gain a sufficient understanding of the strengths and limitations of technological resources, an idea of how these tools can best be put into practice, and an understanding of how to mitigate their shortcomings.

FRC Response and Actions

The FRC's role in professional qualifications is limited to reviewing the Recognised Qualifying Bodies' (RQBs') audit qualification syllabuses. We will endeavour to consider how technology is addressed there when revising syllabuses, and will make suggestions for improvements where necessary, but we encourage users of this training to discuss any issues they feel are not addressed directly with providers, to help ensure future auditors are properly equipped for the modern realities of audit.

The FRC would also like to draw attention to the requirements of ISA (UK) 220 Quality Control for an Audit of Financial Statements (Revised November 2019), particularly paragraph 14, which requires that engagement partners are satisfied that the engagement team collectively have the “appropriate competence and capabilities” to perform an audit engagement in accordance with professional standards. If it is identified that members of the engagement team do not have appropriate capabilities to deploy the technological resources being planned for use in an audit, there is an obligation to ensure the requirements of the standard are met. This could be achieved, for example, through communicating with the firm to ensure appropriately skilled personnel are assigned to the engagement team, or that additional training is provided where appropriate.

Challenger Firms and Technological Resources

Both the CMA and Sir Donald Brydon discussed technological resources and challenger firms, with Sir Donald noting “very different levels of investment between the Big 4 and other firms”, both “in the form of task-specific software to perform assessment of acceptance/continuance of an audit engagement as well as more comprehensive audit documentation platforms supporting the design and execution of the audit process”.

We received a very mixed response regarding this topic, with replies divided into three broad categories as to the extent to which they perceived challenger firms to be disadvantaged regarding their technological capabilities:

- Those who believed that challenger firms are at a significant disadvantage owing mostly to a lack of investment, either in-house or in third-party tools, and a potential inability to recruit and train staff, and believed that even with significant investment, it would be difficult for challenger firms to develop significant capability in this area.

- Those who believed that whilst there was some disadvantage to challengers, a large proportion of that disadvantage could be overcome with available tools, potentially purchased rather than developed in-house if necessary. Some in this group noted that, because purchased tools are not bespoke, there may be instances where an “off the shelf” solution is not as capable as a tool designed in-house and closely integrated into the audit firm's methodology.

- A small group who perceived no disadvantage for challengers and that it was simply their choice not to invest and engage more with technological solutions.

A majority of respondents did believe that challenger firms are disadvantaged to some degree, either quite significantly or only in some areas. Some of the potential disadvantages suggested included:

- The availability of data in a usable form. Whilst this was identified as an issue for larger firms as well, challenger firms may have fewer resources available, and may not have the appropriate tools, to undertake significant data transformation prior to making use of ADA.

- A lack of understanding of what is considered best practice. Some challenger firms, in their responses, felt that larger firms had a better understanding, owing both to their investment and discussions with regulators, of what constitutes best practice in the use of technology and were thus more comfortable in its use.

Two respondents felt that there was little, sometimes even no, disadvantage given the range of third-party tools available for firms not developing in-house solutions and that any disadvantage was a result of a firm choosing to not look to technological resources to supplement their audit methodologies.

Many respondents believed, however, that any disadvantage should be considered to be less about the availability of tools and more about a lack of in-house knowledge and support. Smaller firms may be able to purchase tools, but they may not be as well equipped to build these tools into their methodologies effectively or to understand how these tools might best be deployed, and may lack the firm-wide architecture required to make effective use of them.

Access to Quality Data

The overwhelming majority of respondents supported the idea of a data standard, or a common data model, believing it would be beneficial to the audit market, increasing data quality and facilitating more efficient transfer of data between auditors and companies. Many also saw a common data standard as providing a ‘backbone’ on which to develop and build new technologies. Some respondents also suggested that, in the world of iXBRL and open source banking, common data models increasingly make sense and would not be incongruous to modern auditors.

A number of potential subsidiary benefits were also raised by respondents, including that a common data model may reduce switching costs for companies when moving from one auditor to another, potentially acting as a form of market opening measure where switching costs driven by data handling are a significant factor in audit tenders. This is likely to be of increased relevance for Public Interest Entity (PIE) auditors where mandatory rotation occurs.

A few respondents however suggested that a common data model was not necessary, especially for larger firms which have created their own in-house global models to facilitate work on complex groups or those making use of existing data models such as the one issued by the American Institute of Certified Public Accountants (AICPA). These respondents also discussed how an additional UK data standard, focused solely on the provision of audit services, would likely be too narrow a solution. In our outreach, discussion with key stakeholders indicated a popular belief that a cross-regulatory approach to data standards, considering data access concerns not just for auditors, but for a wider range of parties, including other professional service providers and the legal profession, is likely to be of substantially greater benefit.

Additionally, Sir Donald Brydon identified in his review a potential reluctance on the part of companies to permit access to large amounts of their data, citing the General Data Protection Regulation (GDPR) and wider data security issues. Any solution to data access issues will need to address these concerns.

Respondents also felt that effort would be better focused on ensuring that emerging finance technologies (such as cloud-based financial systems) have appropriate and consistent data access features. In this approach, the focus would be on common access features as opposed to commonality in the data formatting itself. Five respondents discussed Application Programming Interface (API) solutions, identifying them as a potential route to consistent access features.

APIs define interactions between different pieces of computer software. In this model the solution to access would be for different pieces of software to have established methods of communication between them, even though they have different systems and methods for organising data. This is the solution adopted by Her Majesty's Revenue and Customs (HMRC) in Making Tax Digital. The first stage of the development required certain companies to submit Value Added Tax (VAT) returns entirely digitally; but the issue was that companies use many different pieces of accounting software, which produced VAT information in a variety of different, and often inconsistent, formats.

HMRC made use of APIs in its solution, since this meant that, no matter which accounting program the VAT information was held within, it could be communicated to HMRC in a consistent manner. Software providers have created many products to integrate with the HMRC solution, from bespoke software and fully-fledged off-the-shelf packages to simple bridging solutions that allow users of older software to continue to meet their VAT return deadlines. This is a strong indicator that a market-led approach could be a potential solution to data access issues.
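The principle can be illustrated with a short sketch of the adapter pattern: each system keeps its own internal format but exposes the same agreed method returning the same data shape, so the recipient can handle every source in one consistent way. The two systems, their formats, the field names and the `submit` function are all hypothetical; this is not HMRC's Making Tax Digital API, whose actual endpoints and fields are not described here.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Protocol


@dataclass(frozen=True)
class VatSummary:
    """Common shape every adapter must return (hypothetical fields)."""
    period: str
    output_vat: Decimal
    input_vat: Decimal

    @property
    def net_vat(self) -> Decimal:
        return self.output_vat - self.input_vat


class VatSource(Protocol):
    """The agreed interface: any system that provides this method can report."""
    def vat_summary(self, period: str) -> VatSummary: ...


class LegacyCsvPackage:
    """Hypothetical older package exporting 'period,sales_vat,purchase_vat' rows."""
    def __init__(self, csv_text: str):
        self.rows = [line.split(",") for line in csv_text.strip().splitlines()[1:]]

    def vat_summary(self, period: str) -> VatSummary:
        for row_period, sales_vat, purchase_vat in self.rows:
            if row_period == period:
                return VatSummary(period, Decimal(sales_vat), Decimal(purchase_vat))
        raise KeyError(period)


class CloudLedger:
    """Hypothetical cloud system holding VAT in pence inside nested dicts."""
    def __init__(self, data: dict):
        self.data = data

    @staticmethod
    def _pounds(pence: int) -> Decimal:
        return (Decimal(pence) / 100).quantize(Decimal("0.01"))

    def vat_summary(self, period: str) -> VatSummary:
        vat = self.data[period]["vat"]
        return VatSummary(period, self._pounds(vat["out_pence"]), self._pounds(vat["in_pence"]))


def submit(source: VatSource, period: str) -> str:
    """The recipient handles every source identically via the interface."""
    summary = source.vat_summary(period)
    return f"{summary.period}: net VAT {summary.net_vat}"


legacy = LegacyCsvPackage("period,sales_vat,purchase_vat\n2021-Q1,2000.00,500.50\n")
cloud = CloudLedger({"2021-Q1": {"vat": {"out_pence": 200000, "in_pence": 50050}}})
print(submit(legacy, "2021-Q1"))  # 2021-Q1: net VAT 1499.50
print(submit(cloud, "2021-Q1"))
```

The design choice mirrors the point in the text: neither system changes how it organises its own data; only the access method and the returned shape are common.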

The FRC has already engaged extensively, and will continue to do so, with the multiple providers in the market who are developing solutions to data access concerns.

Finally, six respondents raised retention of datasets used in ADA as a major concern, believing there was a lack of clarity around what is a reasonable retention period. Discussion with audit firms indicated a variety in practice, with some retaining data sets only for short periods, while others retained data sets for much longer periods.

FRC Response and Actions

The FRC's view is that the retention period for datasets is a matter of professional judgment. In making this judgment, however, auditors should ensure that documentation is sufficient to meet the requirements of ISA (UK) 230 (Updated January 2020) Audit Documentation, and that any applicable legal requirements regarding minimum data retention periods are met.

The FRC believes that producing a data standard for the UK alone would not be beneficial when a number of solutions already exist, some with an international reach. As discussed above, developing a standard solely relating to the provision of audit services is also unlikely to be a successful route. A market-led approach, taking account of access concerns for a wider range of stakeholders, including broader financial services providers and the legal profession, is likely to be more beneficial and better able to respond to the needs of users efficiently and appropriately.

The FRC will however continue to monitor the market, and we will also continue our discussions with providers of market-led solutions, to understand how potential solutions operate and how the FRC might best be placed to input into this process in the future, if this should become necessary.

Ethics, Technology and Audit

The most significant ethical implications arising from the greater use of technology, identified by a majority of respondents, were the threats, both actual and perceived, to auditor independence.

Sir Donald Brydon highlighted this in his review, noting that technological developments may “create a new opportunity for confusion between audit and business service”, leading to a blurring of the relatively well-established line between audit and non-audit services.

A significant concern, raised by a number of respondents, related not just to the sharing of business insights but potentially to the sharing of technology itself. There are significant ethical concerns if, for example, the auditor provides analytical tools to the company or provides consultancy services related to the design of analytical tools and systems.

The FRC's Ethical Standard contains relevant provisions on the design of IT systems[12], though respondents felt that more guidance relating specifically to emerging technologies would be beneficial.

Respondents also identified a number of issues which, whilst generally outside the scope of the Ethical Standard, represent wider ethical concerns about the expanded use of technology.

The most common was the perceived “de-skilling” of auditors if technology is seen as a direct replacement for them. As discussed above, technology will still need operators. Whilst the nature of audit may change, automated tools and techniques are just another set of tools and are not capable of making the sophisticated professional judgments that are required of an auditor.

Respondents generally agreed that the auditor's increasing interrogation of a company's dataset may increase the risk of providing business insights that go beyond what would typically be included in a management letter and instead represent something akin to a business advisory service. One respondent commented that there was a risk of "blurring the line" between being the auditor and the adviser.

It is clear why this may become a wider risk. Predictive analysis of the likelihood of default by a debtor, for example, may provide valuable audit evidence, but it would also be of significant interest to credit control staff as they seek to recover debt and make judgments about how credit terms may need to be adjusted. One concern was that this provision of wider insights may occur almost inadvertently. For example, an audit firm is unlikely to provide a detailed report to management in its first year of using ADA, but it might provide a little more each year, so that over time there is a slow 'slip' into providing more detailed insight than is appropriate.

Respondents indicated that, generally speaking, the current Ethical Standard's restrictions on non-audit services and its position on non-involvement in management decision-taking[13] are a strong starting point for mitigating the threat described above. However, they felt that material explicitly dealing with insights generated from the use of data analytics would be beneficial, both to audit firms and to help clients understand why they should not expect detailed, business insight-focused analysis from their auditors.

AI, ML and Professional Ethics

The use of AI tools presents unique ethical challenges, over and above those raised by the more general use of technological resources in audit. Here we discuss ethical issues in the context of audit and the relevant ethical standards and codes (for example, where the use of AI tools may give rise to a threat to an auditor's objectivity and independence), rather than wider ethical or moral concerns about the use of AI tools in decision-making processes.

One concern relates to the sale or licensing of AI tools to clients. Many audit tools analyse data and provide insight in a way that could be useful in making business-critical decisions, and audit firms may wish to make commercial use of this by selling or licensing tools to audit clients. There is no outright prohibition on auditors providing information technology services, except where the systems concerned would be important to any significant part of the accounting or financial management system. Nevertheless, paragraphs 5.47 to 5.50 of the FRC Ethical Standard need to be carefully considered when auditors are considering selling AI, or any other analytical tool, to audit clients.

Third-Party Technology Providers and Audit

Reactions to the increasing use of third-party providers were mixed. Some respondents believed that it presents a range of ethical, documentation and practical challenges. Others felt that these concerns were less pressing and that, in some instances, the use of third-party providers may alleviate concerns.

One of the primary concerns identified was a potential lack of clarity about whom third-party providers have their relationships with: the audit firm, the client, or potentially both, depending on the arrangement. Where the relationship is unclear, there is potentially an increased risk of inadvertent breaches of auditing and ethical standards. A number of respondents also raised the lack of regulation around third-party providers as an area of concern. Auditors, and their methodologies, are subject to a wide range of standards and regulation, which third-party providers are not. Some respondents suggested that this could create issues both for assurance over the quality and operation of third-party providers' tools and for regulators, who would be unable to take action against third-party providers should it be required.

AI, ML and Third-Party Providers

Linked to the above points about the explainability of AI, two respondents identified an additional concern for challenger firms that have purchased technological solutions from third-party providers.

When a firm has developed an ADA or AI tool itself, it faces challenges in explaining and documenting the tool's use. This challenge is even greater when the tool was not developed in-house, as there is an additional step in the process: understanding the tool. This is, again, more challenging when AI or ML tools are used, owing to the sometimes greater difficulty of understanding how such tools function.

As such, challenger firms need to consider how they document the use of AI tools, as they must first ensure they understand those tools sufficiently to be able to deploy them in an audit.

When making use of third-party tools, audit trails are vital. Auditors should ensure that they are able to obtain from providers clear explanations of a tool's function, including how it manipulates input data to generate insights, so that they can document an audit trail as robust as one that could be created for any internally developed tool.

Respondents identified a number of possible safeguards, or actions that could be taken, to help alleviate these concerns:

- Expansion of the parties considered to be within the audit team to include third parties providing ADA tools and support, such that they would be subject to the same regulations as auditors. This would be a significant step, requiring a fundamental reconsideration of the nature of audit, and potentially overlaps with a number of Sir Donald Brydon's recommendations.

- Inclusion of third-party providers within the scope of ISA (UK) 620[15], such that providers would be subject to the same considerations as apply to the use of experts, such as those providing valuation services. This may be challenging, as providers of services such as audit software do not currently fall within the remit of ISA (UK) 620; the standard is targeted towards the use of experts at the engagement level.

- Implementation of a requirement for third-party technology providers to have some form of assurance over their own technology and systems, similar to a Type 1 or Type 2 controls report, or to be subject to one or more of the standards issued by the International Organisation for Standardisation (ISO), such as ISO 27001 and ISO 20000-1.

Some respondents discussed the valuable role that third parties play in aiding competition by giving challenger firms access to technologies that may be too costly to develop in-house. Some also argued that third-party providers have been present in the audit market for some time, often as providers of methodologies, and that neither their role nor some of the challenges raised in our consultation are new.

Glossary

| Term | Reference | Definition |
|---|---|---|
| Technological Resources | ISA 220 (Revised 2020), paragraphs A63 – A67 | Umbrella term for technology that assists the auditor in performing risk assessment procedures, obtaining audit evidence and / or managing the audit process. |
| Automated Tools and Techniques | ISA (UK) 315 (Revised July 2020), paragraph A21 onwards | Technology used to perform risk assessment procedures and / or obtain audit evidence. A subset of technological resources. |
| Audit data analytics (ADA) | As used in AQR's 2020 review, taken from the IAASB Data Analytics Working Group's Request for Input dated September 2016 | A subset of Automated Tools and Techniques. "The science and art of discovering and analysing patterns, deviations and inconsistencies and identifying anomalies, and extracting other useful information in data underlying or related to the subject of an audit through analysis, modelling and visualisation for the purpose of planning or performing the audit." For the purposes of this review, an ADA or ADAs are data analytic techniques that can be used to perform risk assessment, tests of controls, substantive procedures (that is, tests of details or substantive analytical procedures) or concluding audit procedures. For clarity, we do not use the term ADA to refer to automated tools and techniques that involve the use of artificial intelligence (AI) or machine learning (ML). |

Footnotes

1. Terms in bold are defined in full in the glossary.
2. ISA (UK) 315 (Revised July 2020) Identifying and Assessing the Risks of Material Misstatement Through Understanding of the Entity and Its Environment.
3. Including analysis of responses to questions 1, 3, 4, 6, 11 and 12 of the FRC consultation.
4. ISA (UK) 520 Analytical Procedures, paragraph 5(c).
5. Including analysis of responses to question 5 of the FRC consultation.
6. Sir Donald Brydon review, paragraphs 6.0.10 – 6.1.4.
7. Including analysis of responses to question 2 of the FRC consultation.
8. Sir Donald Brydon review, paragraph 24.0.1.
9. Including analysis of responses to question 9 of the FRC consultation.
10. Sir Donald Brydon review, paragraph 24.1.11.
11. Including analysis of responses to questions 8 and 10 of the FRC consultation.
12. FRC Ethical Standard 2019, paragraphs 5.47 – 5.50.
13. FRC Ethical Standard 2019, paragraphs 1.23 – 1.25.
14. Including analysis of responses to questions 13 and 14 of the FRC consultation.
15. ISA (UK) 620 (Revised November 2019) Using the Work of an Auditor's Expert.